Junkyard Python Scraper

A short Python script that scrapes a local junkyard's website and saves the inventory in CSV format

IT

9/28/2025 · 1 min read

Scraping Junkyard Inventory for Parts Deals

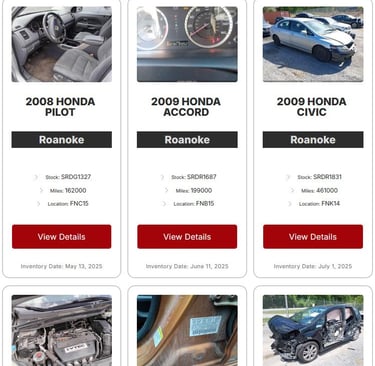

I wanted a faster way to see what was showing up at the local junkyard without clicking through their website every day. So I built a Python scraper that pulls their inventory, saves it to a CSV, and updates whenever I run it.

The script grabs car listings (make, model, year, yard location, arrival date) and puts them in a format I can actually search and filter. That makes it easy to see when something useful shows up. I also set it up to save data incrementally, so I can look back and see how long cars stick around or if certain parts are worth pulling before they’re gone.
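For anyone curious what the incremental saving could look like, here is a minimal sketch. It is not the actual script: the column names, the `append_new_listings` helper, and the idea of treating a full row as the duplicate key are all assumptions for illustration. It only covers the CSV side and expects the scraped listings to arrive as a list of dictionaries.

```python
import csv
from pathlib import Path

# Assumed column names, based on the fields mentioned above.
FIELDS = ["make", "model", "year", "yard_location", "arrival_date"]


def append_new_listings(csv_path, listings):
    """Append listings not already in the CSV and return how many were added.

    Hypothetical helper: a listing counts as a duplicate when every field
    matches a row that is already in the file.
    """
    path = Path(csv_path)

    # Collect the rows already saved so repeated runs do not duplicate them.
    seen = set()
    if path.exists():
        with path.open(newline="", encoding="utf-8") as f:
            for row in csv.DictReader(f):
                seen.add(tuple(row[field] for field in FIELDS))

    # Keep only listings that have not been saved yet.
    new_rows = []
    for item in listings:
        key = tuple(str(item[field]) for field in FIELDS)
        if key not in seen:
            seen.add(key)
            new_rows.append(dict(zip(FIELDS, key)))

    # Write the header only when the file is new or empty, then append.
    write_header = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if write_header:
            writer.writeheader()
        writer.writerows(new_rows)

    return len(new_rows)
```

Because the file is opened in append mode, older rows stay in place, which is what makes it possible to look back at when a car first showed up.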

This kind of tool saves time and money. Instead of driving to the yard or digging through dozens of web pages, I’ve got the info in one place. It’s also flexible: I can filter for specific models, track trends, or even decide which yard is worth visiting first.
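Filtering for a specific model can be as simple as a loop over the CSV rows. The sketch below is illustrative only: the column names, the `filter_listings` helper, and the sample rows are made up for the example and are not real inventory data.

```python
import csv
import io

# Made-up sample data standing in for the saved CSV; column names are assumed.
SAMPLE_CSV = """\
make,model,year,yard_location,arrival_date
Honda,Civic,2004,Row 12,2025-09-20
Ford,Ranger,1999,Row 3,2025-09-22
Honda,Civic,1998,Row 7,2025-09-25
"""


def filter_listings(rows, make=None, model=None, min_year=None):
    """Return the rows matching the given make, model, and minimum year.

    Hypothetical helper: any filter left as None is ignored, and make and
    model are compared case-insensitively.
    """
    matches = []
    for row in rows:
        if make and row["make"].lower() != make.lower():
            continue
        if model and row["model"].lower() != model.lower():
            continue
        if min_year is not None and int(row["year"]) < min_year:
            continue
        matches.append(row)
    return matches


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
for car in filter_listings(rows, make="honda", model="civic", min_year=2000):
    print(car["year"], car["make"], car["model"], car["yard_location"])
```

To run it against a real file, swap the `io.StringIO(SAMPLE_CSV)` part for an opened CSV file.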

Just like with my car projects, the key was taking something tedious and making it more efficient with the right tools. The scraper doesn’t pull parts for me, but it makes the hunt a lot smarter.